In the world of machine learning (ML), managing the full lifecycle of data from ingestion to model deployment can be overwhelming. Building backend AI pipelines that automate and streamline this workflow is crucial for scaling AI systems and ensuring the quality and consistency of results.

In this article, we’ll explore the 10 critical steps to create automated and efficient AI pipelines, allowing you to simplify the complexities of ML workflows and boost productivity.

1. Data Collection and Ingestion

Every AI pipeline starts with data collection. Before building a model, you need access to the right data, whether it’s from databases, APIs, or third-party sources.

Automating the ingestion process involves setting up connectors or data streams that pull data from various sources into a centralised system. Tools like Apache Kafka, AWS Kinesis, or Google Cloud Pub/Sub are popular for real-time data ingestion, ensuring your pipeline stays up to date with fresh information.

Tip: Create a robust data validation layer to catch any issues during ingestion, ensuring only clean and relevant data flows into your pipeline.

2. Data Preprocessing and Cleaning

Once the data is ingested, the next step is data preprocessing. Raw data is often messy, with missing values, duplicates, or irrelevant features. Automating this step can save countless hours in manual cleaning.

Use libraries such as Pandas, NumPy, or Apache Spark to automate:

- Removing duplicates

- Handling missing values

- Feature scaling and encoding

Pro tip: Implement data validation rules to catch inconsistencies in your datasets before they reach the model training phase.

3. Data Transformation and Feature Engineering

Feature engineering is a critical part of any AI pipeline. It involves transforming raw data into features that can be used by machine learning algorithms. Automating the feature extraction process can help ensure consistency and speed in the pipeline.

Use frameworks like Apache Beam or Luigi to automate transformations such as:

- Aggregating data

- Generating new features based on domain knowledge

- One-hot encoding or normalisation

Example: If working with text data, use automated Natural Language Processing (NLP) techniques like tokenisation and stemming to prepare your features.

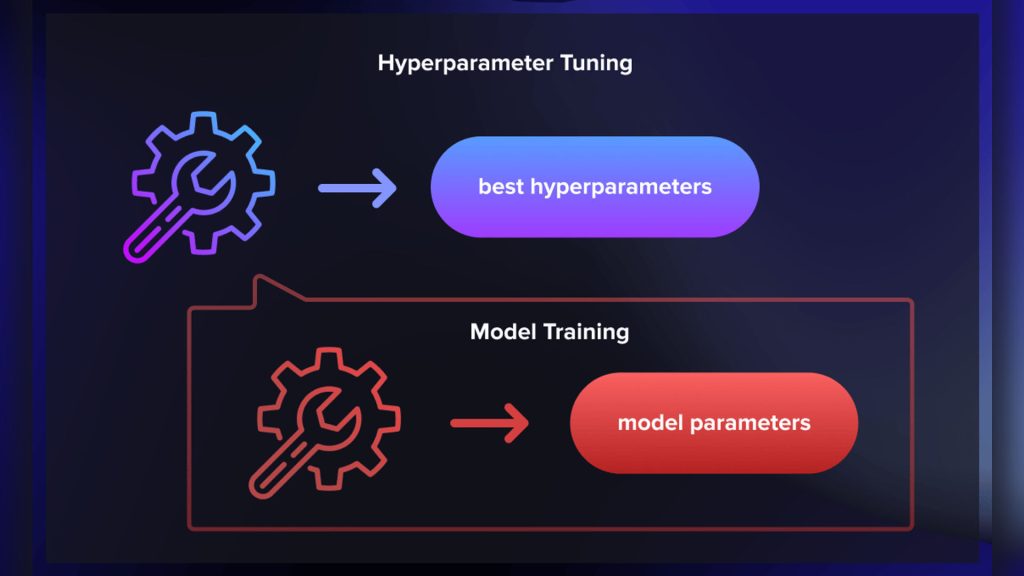

4. Model Training and Hyperparameter Tuning

Training machine learning models can be time-consuming and computationally expensive. To automate this step, set up pipelines that handle the entire process, from training the model to tuning its hyperparameters.

Use tools like MLflow, Kubeflow, or TensorFlow Extended (TFX) to automate:

- Model selection based on performance metrics

- Hyperparameter optimisation (using grid search, random search, or Bayesian optimisation)

- Continuous model training with new data

Tip: Implement automated versioning to track different model iterations and ensure that the best-performing models are deployed.

5. Model Evaluation and Testing

Once a model is trained, it’s essential to evaluate its performance. Automate testing by using a set of predefined evaluation metrics (e.g., accuracy, precision, recall) to assess the model’s effectiveness.

By automating this process, you can quickly identify underperforming models and iterate faster. For more complex models, implement cross-validation to ensure robustness.

Example: Use K-Fold Cross Validation to assess how well the model generalises to unseen data.

6. Model Deployment and Serving

Deploying a machine learning model to production involves making it accessible for real-time predictions or batch processing. Automating model deployment ensures you can move from development to production quickly and reliably.

Tools like TensorFlow Serving, TorchServe, and Seldon Core allow you to automate the deployment process by:

- Exposing the model as a REST API or gRPC service

- Managing model updates with versioning

- Scaling deployment based on demand

Tip: Automate rollback procedures to revert to previous model versions in case of issues.

7. Monitoring and Logging

Once deployed, it’s essential to monitor your AI models for performance in production. Automated monitoring and logging allow you to track:

- Prediction latency

- Model drift

- Errors or anomalies in predictions

Tools like Prometheus, Grafana, and ELK Stack can be used to gather and visualise metrics related to model performance. Integrating automated alerting ensures you can quickly respond to any degradation in service.

Pro tip: Set up model performance dashboards to keep stakeholders informed about how the model is performing over time.

8. Model Retraining and Versioning

AI models tend to degrade over time as new data emerges, a phenomenon known as model drift. Automating model retraining is essential to maintain performance as your data evolves.

Use pipelines that trigger automatic retraining when certain thresholds are met, such as:

- A decrease in model accuracy

- Significant changes in the input data distribution

You can use model versioning tools like DVC (Data Version Control) or MLflow to ensure that you always know which model is in production and can quickly roll back to a previous version if needed.

Example: Set up automated retraining on a weekly basis using fresh data from your ingestion pipeline.

9. Continuous Integration and Continuous Delivery (CI/CD)

Implementing CI/CD in your AI pipeline is crucial for automating testing, deployment, and rollback of machine learning models.

Use tools like Jenkins, GitLab CI, or CircleCI to:

- Run automated tests every time new code or data is committed

- Deploy updated models to production automatically

- Ensure that everything works seamlessly through automated testing and staging environments

Best practice: Automate the full testing and deployment process to minimise human error and speed up the delivery pipeline.

10. Data and Model Governance

As your AI pipeline grows, maintaining data and model governance becomes a priority. Automating data lineage tracking and model audit logs ensures compliance with regulations and provides transparency in your ML processes.

Use tools like Kubeflow Pipelines or Apache Airflow to create reproducible and auditable workflows that can be reviewed and monitored by all stakeholders.

Tip: Implement automated version control for datasets, models, and code to ensure full traceability.

Final Thoughts

Building backend AI pipelines that automate machine learning workflows requires thoughtful planning and the right tools. By automating key stages from data ingestion and preprocessing to model training, deployment, and monitoring you can build scalable, efficient, and maintainable pipelines that enable continuous innovation in your AI systems.

Whether you are looking to optimise existing workflows or build from scratch, following these 10 critical steps will ensure your pipeline is ready for the demands of production-grade AI systems.

If you need help building robust AI pipelines or automating your machine learning workflows, Dev Centre House Ireland offers expert solutions tailored to your specific needs, ensuring your system can handle both current and future challenges.

Ready to automate your AI workflows? Start building smarter, faster, and more scalable pipelines today.